OnDemand#

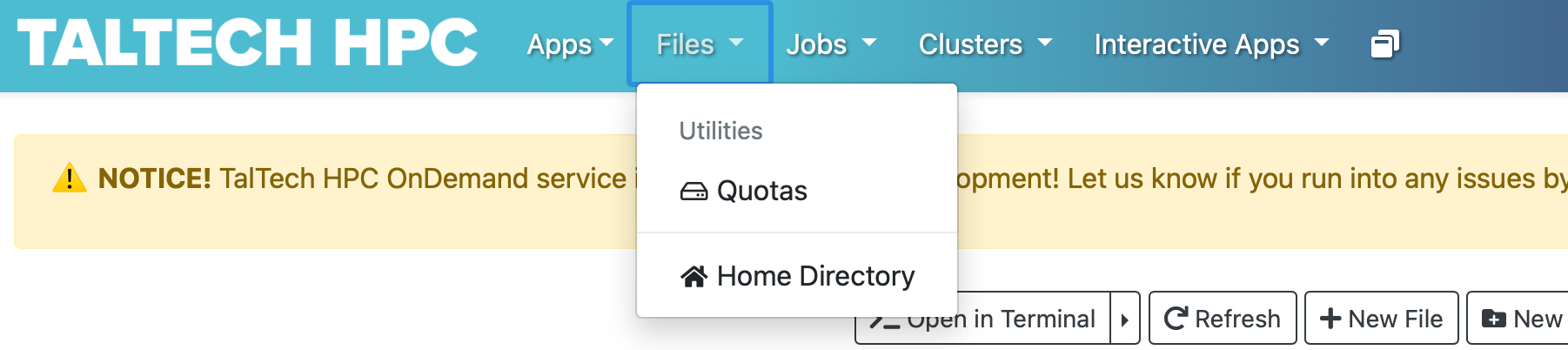

OnDemand, available at ondemand.hpc.taltech.ee, is a graphical user interface that provides access to the HPC via a web browser. Within the OnDemand environment, users can:

-

run Desktop session ( -> TalTech HPC Desktop)

-

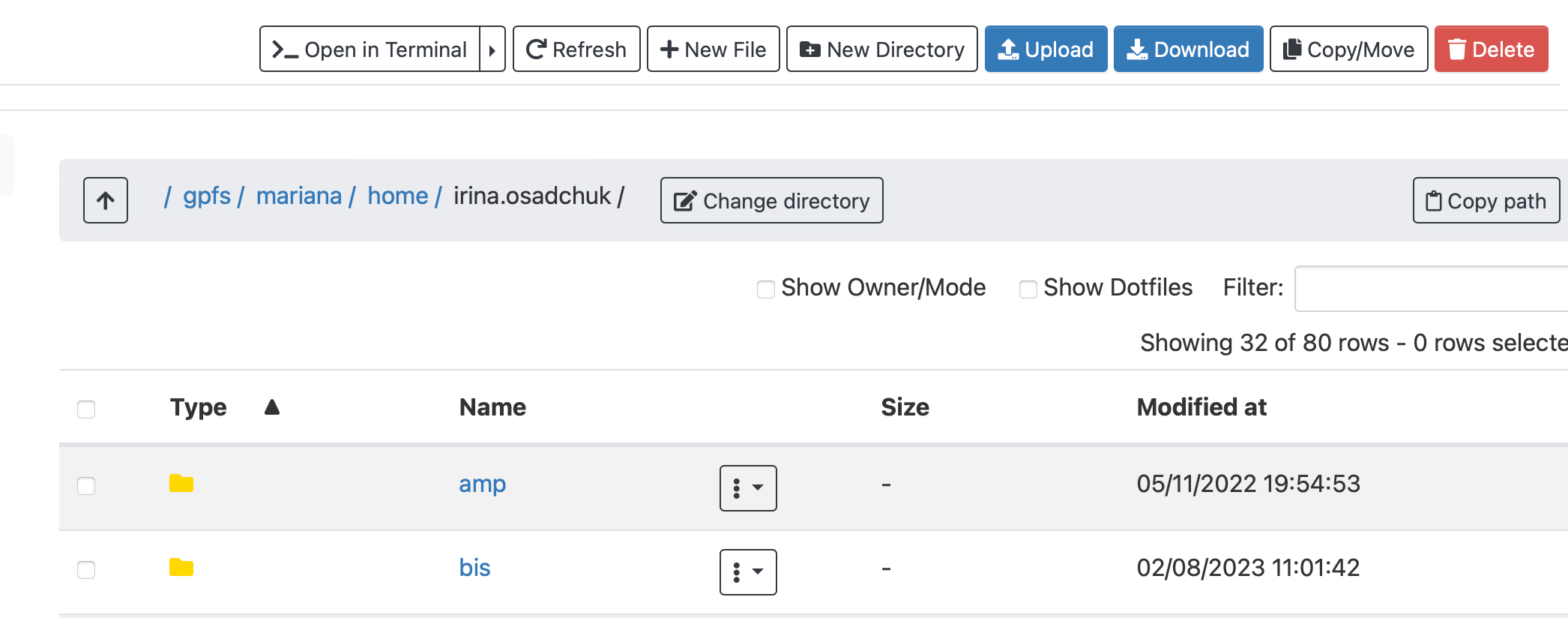

access to HPC files ( -> Home directory)

-

upload, download and delete files ( -> Home directory)

-

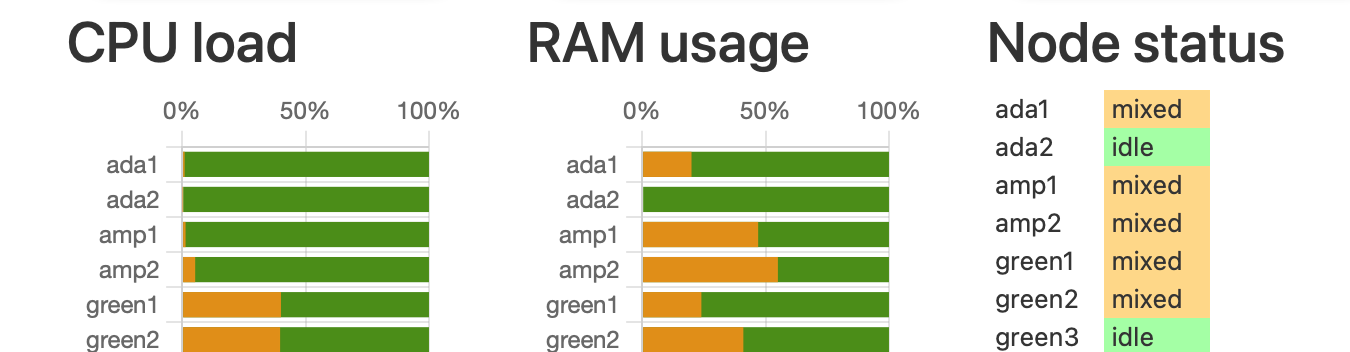

check nodes load

-

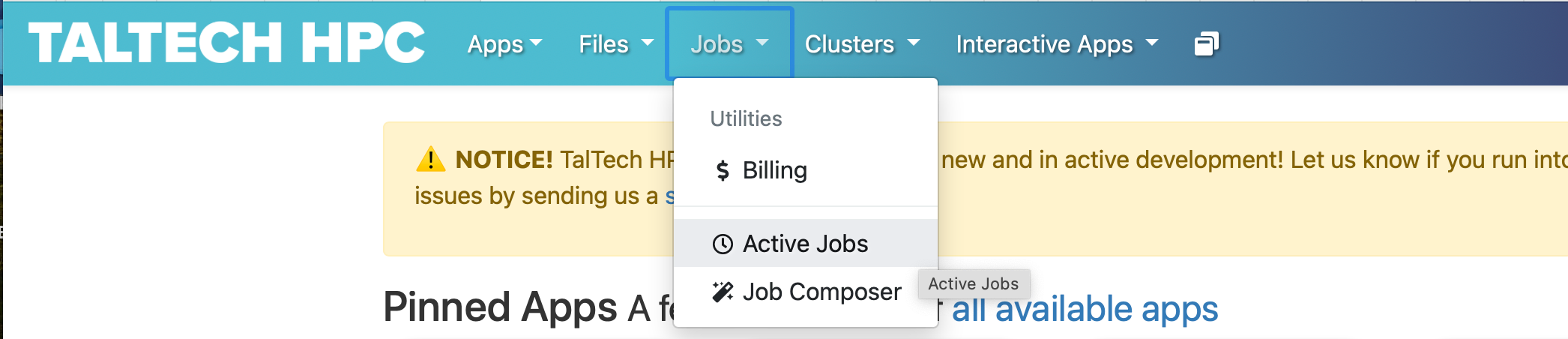

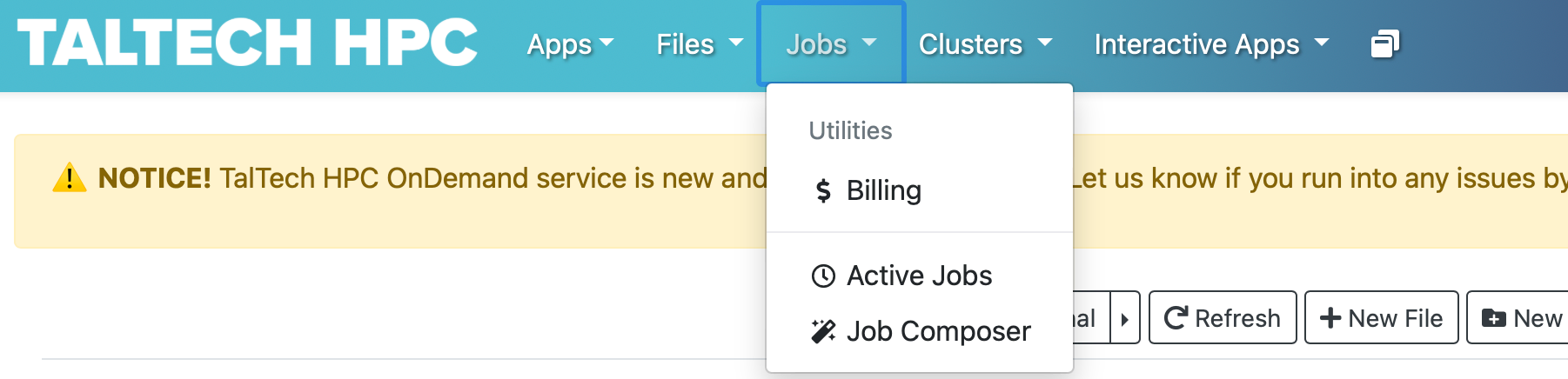

monitor and cancel own jobs

-

check own filesystem quotas

-

check own bills

-

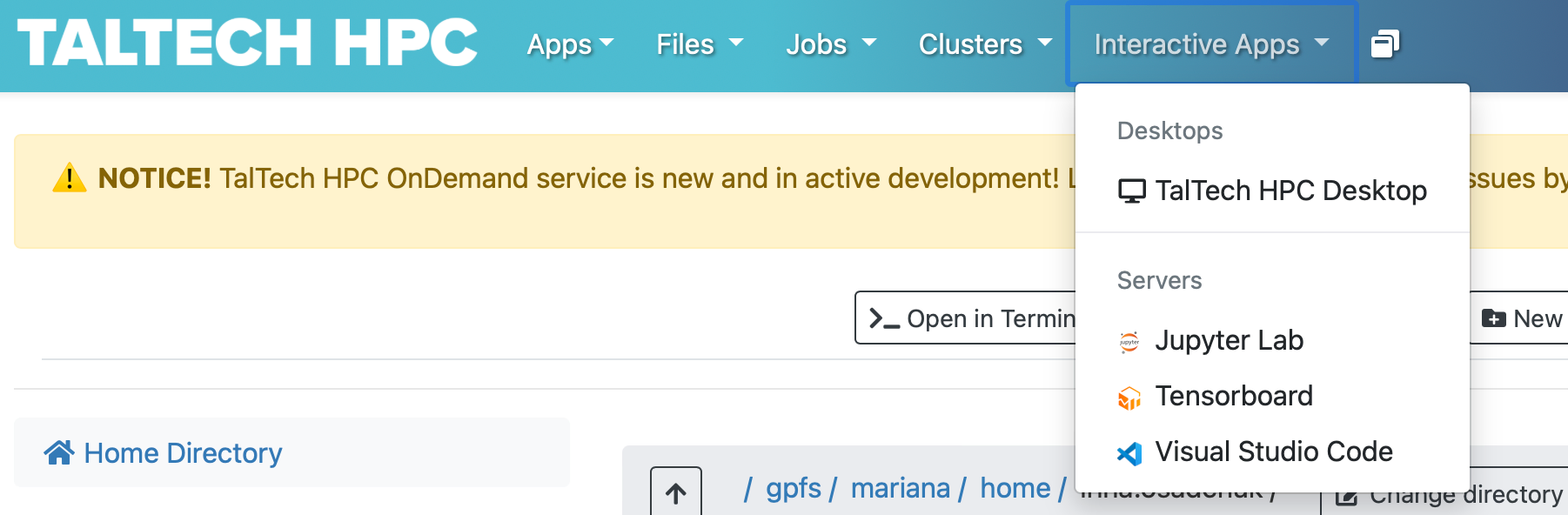

run interactive applications like Jupyter

The default desktop environment is Xfce, which is configurable, lightweight and fast.

The menu contains only a few programs from the operating system. However, all installed software can be started from an XTerminal using the module system, as you would from the command line.
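As a minimal sketch of what this looks like in an XTerminal, the commands below list the available modules, load two of them, and show what is loaded. The module names `rocky8` and `micromamba` are taken from the micromamba section of this page and serve only as an example; the `have_module` helper is a hypothetical convenience, not part of the HPC setup.

```shell
#!/bin/bash
# Sketch: run inside an XTerminal on the OnDemand desktop.

# Report whether the "module" command is defined in this shell.
have_module() {
    type module >/dev/null 2>&1
}

if have_module; then
    module avail                    # list the software provided through modules
    module load rocky8 micromamba   # example module names from the micromamba section of this page
    module list                     # show which modules are now loaded
else
    echo "No 'module' command here; open an XTerminal on the HPC desktop and try again."
fi
```

Programs provided by a loaded module can then be started from the same terminal.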

Job Composer#

The typical workflow with the job composer is the following.

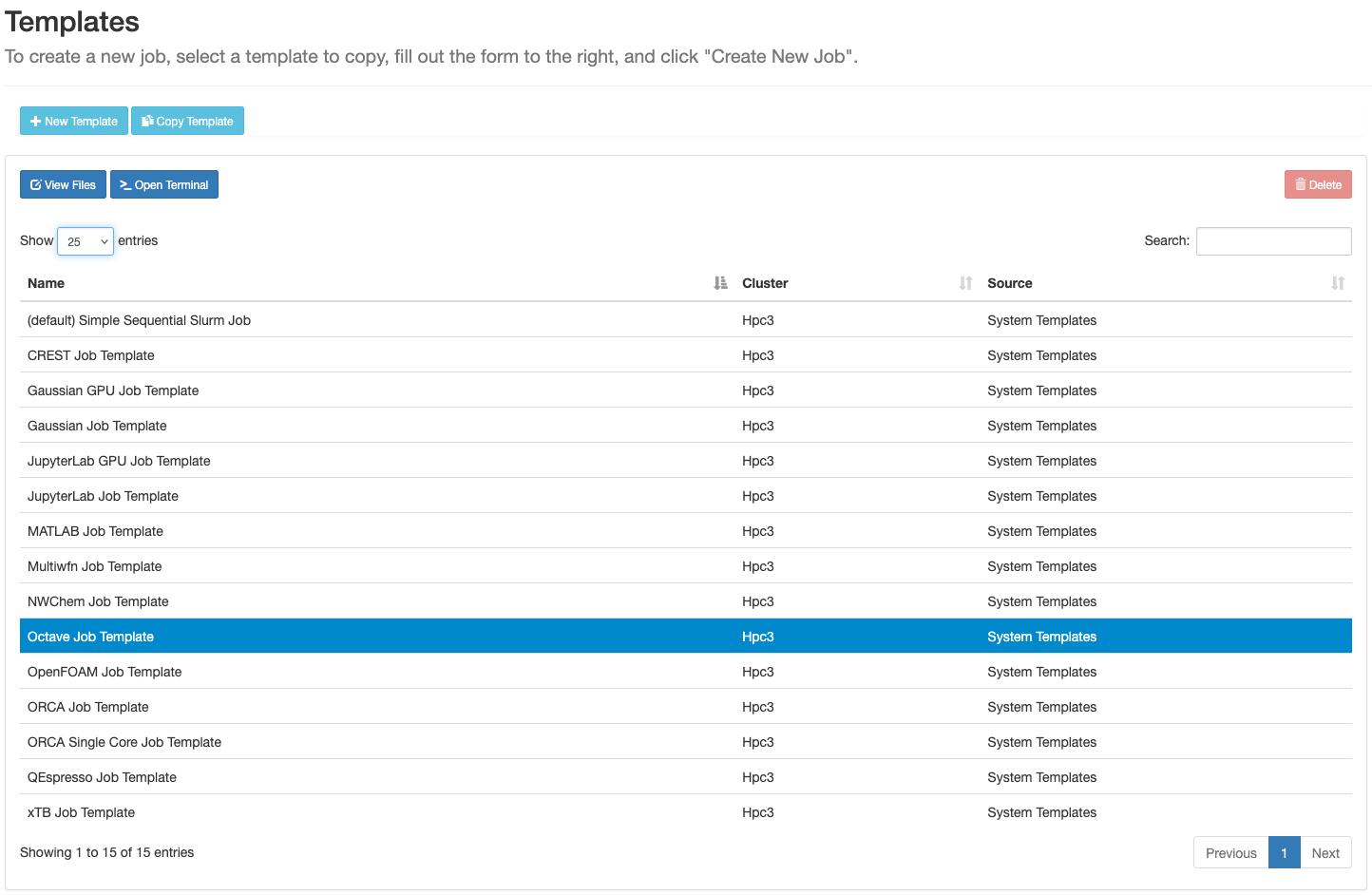

- Navigate to the job templates in the Job Composer via ondemand.hpc.taltech.ee > Utilities > Job Composer > Templates or directly via this URL

- Select the software template you intend to use; if there is no template for the software you wish to use, let us know!

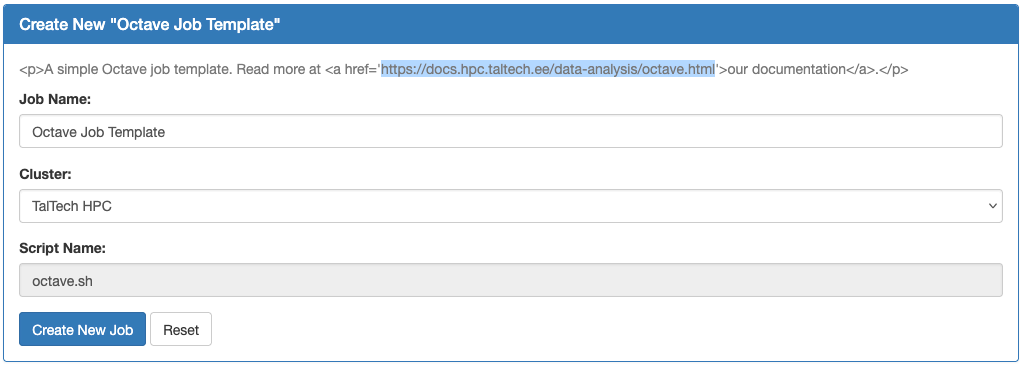

- Optionally visit the additional documentation, then create a new job with that template

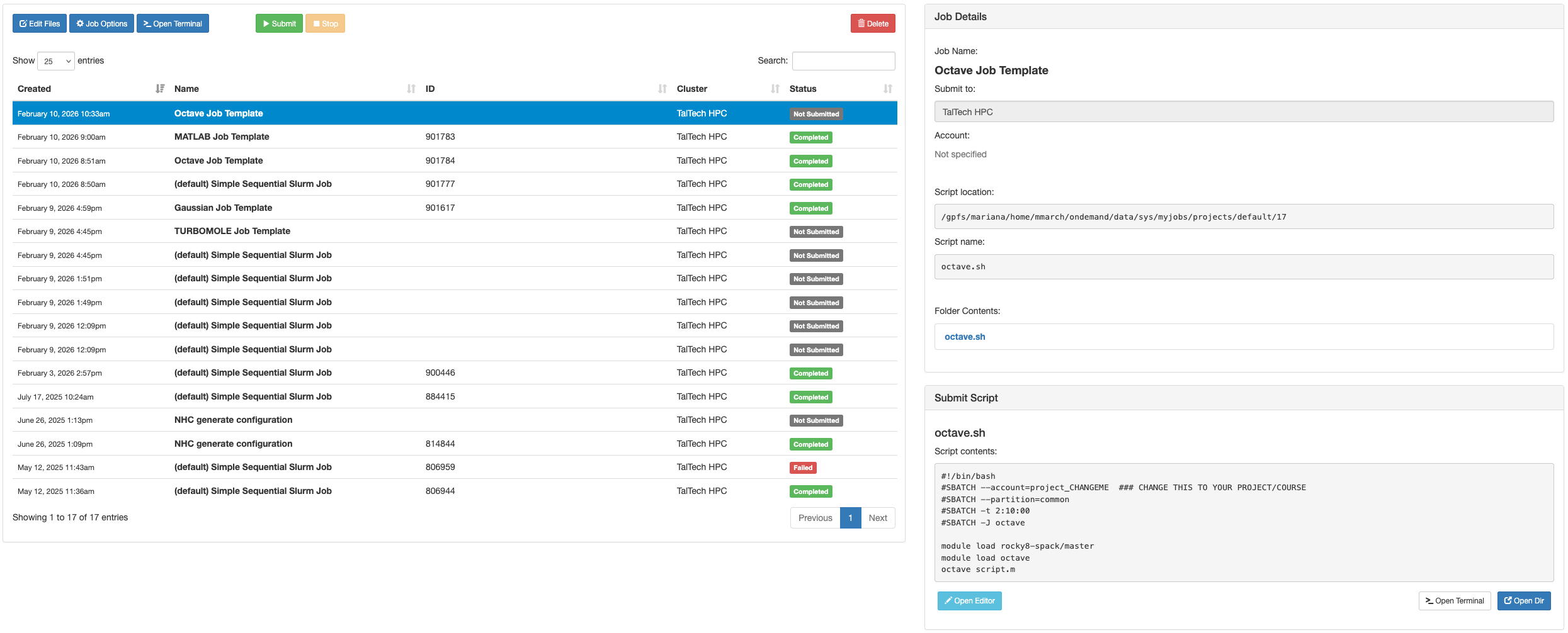

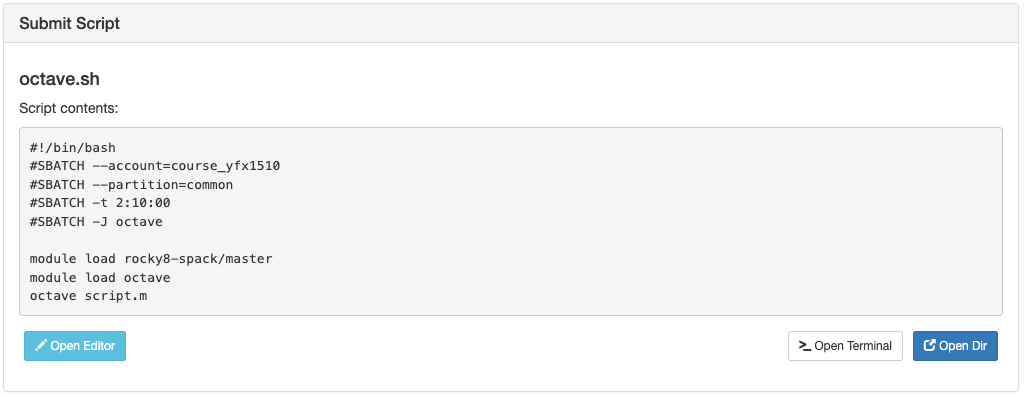

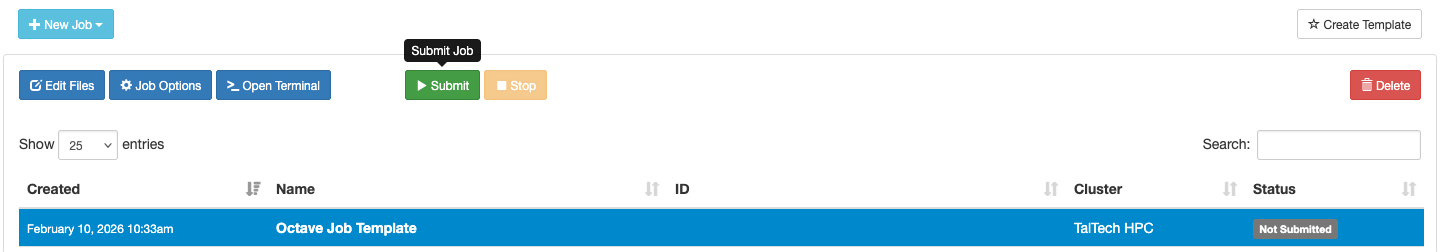

- See the newly created, yet unsubmitted job and edit it

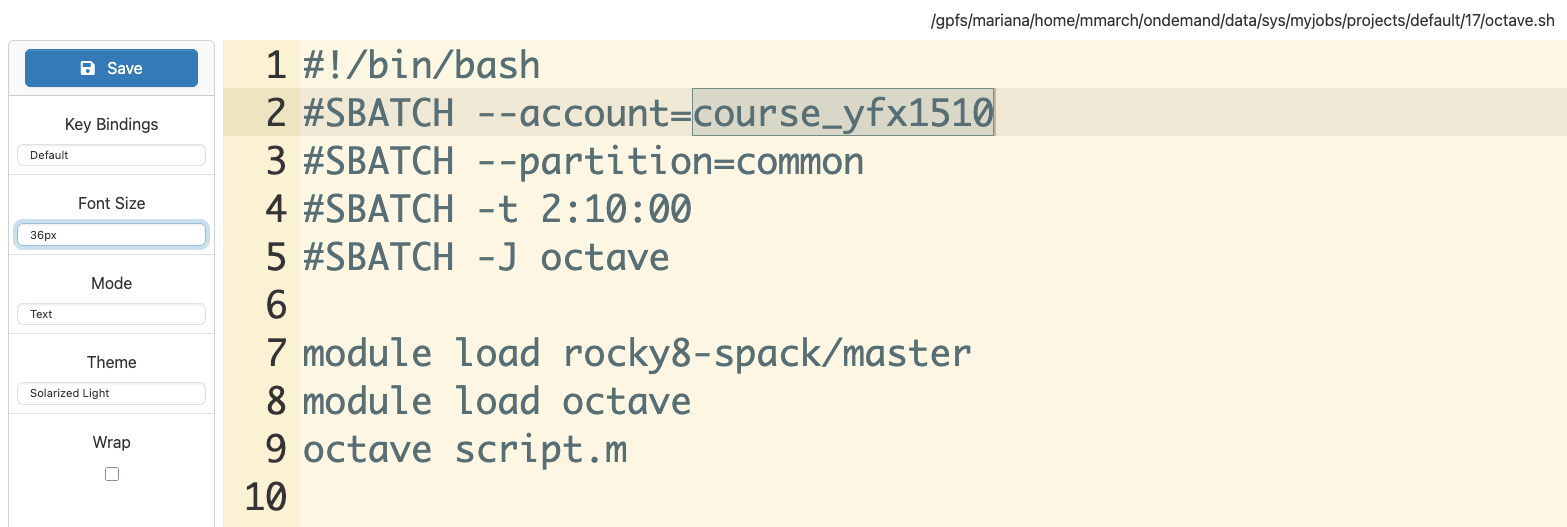

- Adapt the template to your own job, then go back to the previous tab. Make sure to specify the correct account; if you have access to none, remove the line or use the default user_<username>

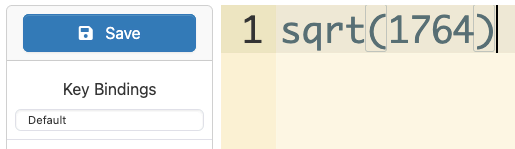

- Open the directory to create other files that you might need before running the job, such as configurations and input

- Once you have created a file with the appropriate name, edit it; repeat for all the files you need, then go back to the previous tab

- Submit your job; you will be able to follow its progress from the small status badge or from the Active Jobs utility

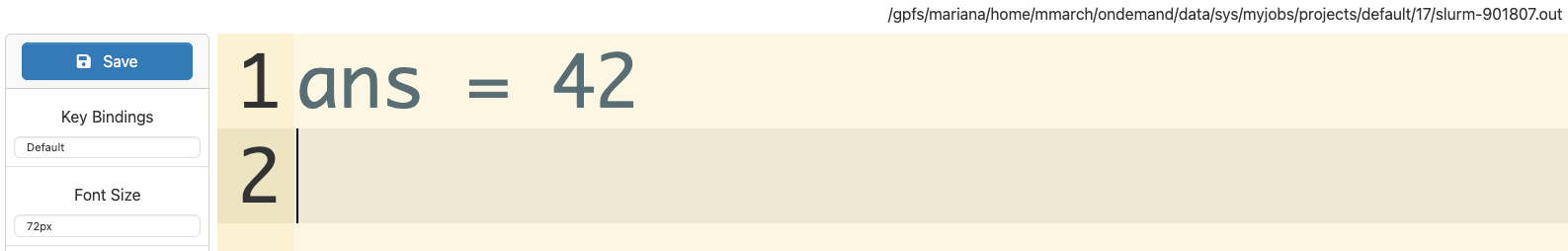

- Once the job is over, in the same directory that you edited before, you will find the output (typically both stderr and stdout) in the specified file (typically *.out)

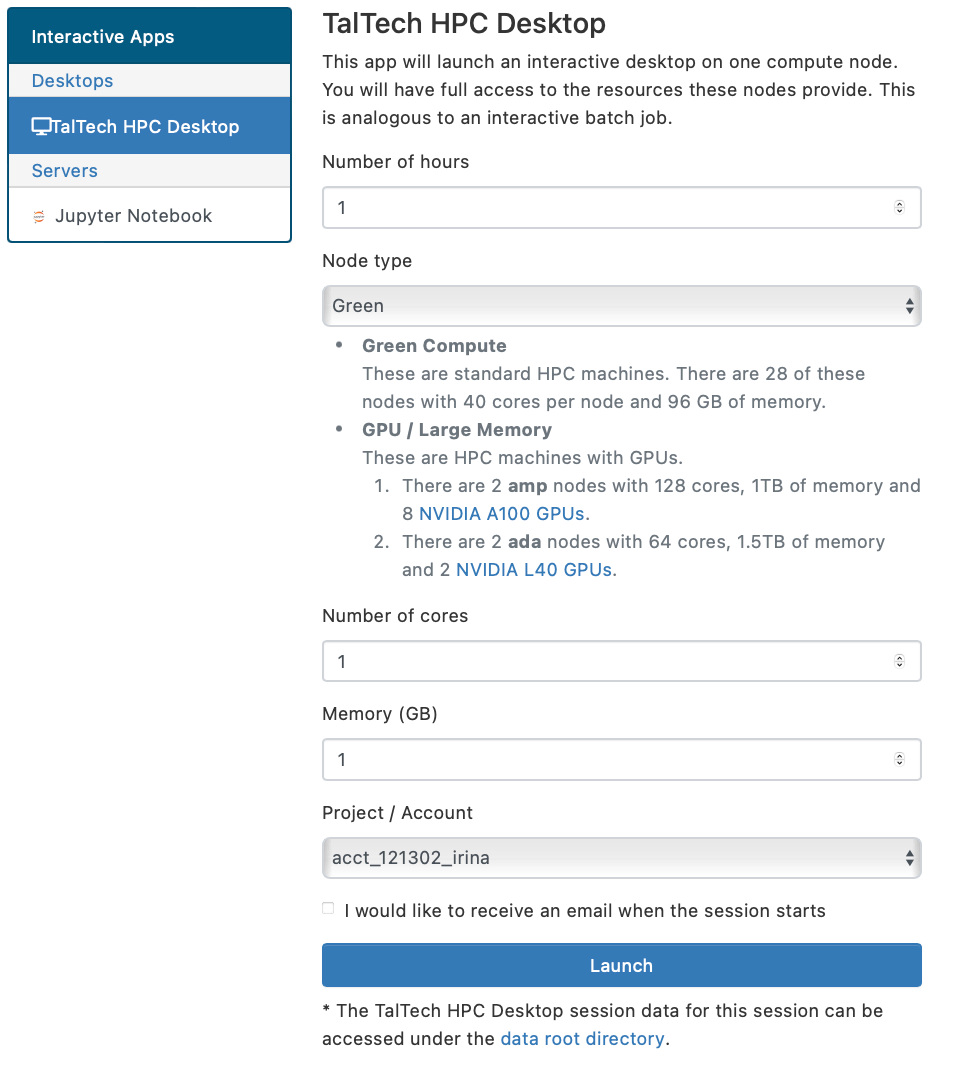
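To make the account and output settings from the steps above concrete, here is a hedged sketch of what an edited job script might contain. It assumes the scheduler is Slurm and that templates are plain batch scripts, neither of which this page states; the file names `example-job.sh` and `example.out`, the job name, and the time limit are hypothetical. The snippet writes the script to a file so that the default account user_<username> is filled in from the current user name.

```shell
#!/bin/bash
# Sketch only: assumes Slurm (#SBATCH directives); adapt to the real template.

# Default account named in the steps above: user_<username>.
account="user_${USER:-$(id -un)}"

# Write a minimal job script; file names here are hypothetical.
cat > example-job.sh <<EOF
#!/bin/bash
#SBATCH --job-name=example
#SBATCH --account=${account}
#SBATCH --time=00:10:00
#SBATCH --output=example.out

echo "Job running on \$(uname -n)"
EOF

echo "Wrote example-job.sh with account ${account}"
```

The text between the two `EOF` markers is what you would paste into the Job Composer editor. Under Slurm, when only an output file is given, stderr is written to the same file as stdout.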

OnDemand Desktop#

-

Choose "TalTech HPC Desktop".

-

Set up and launch an interactive desktop (time, number and type of cores (CPU=green, GPU), memory). 1 core and 1 GB of memory is usually enough if no calculations are planned.

NB! Check your account.

-

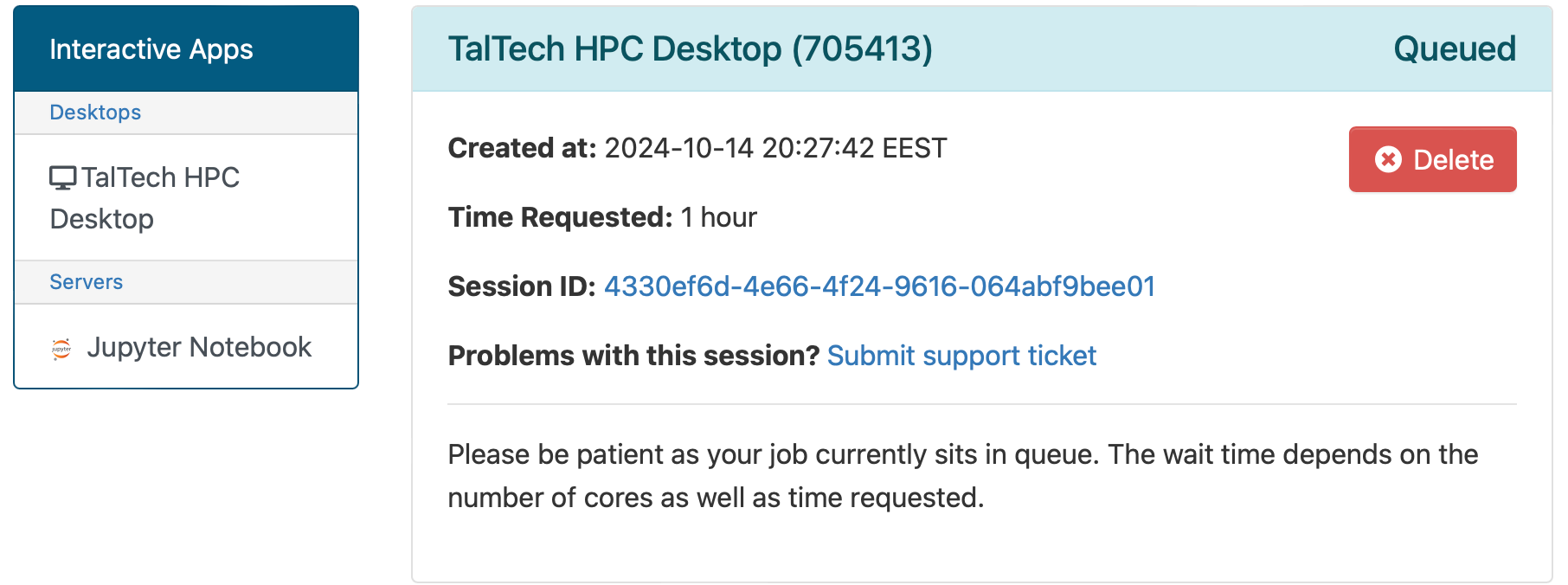

Firstly, your request will be put into a queue and this picture will appear.

-

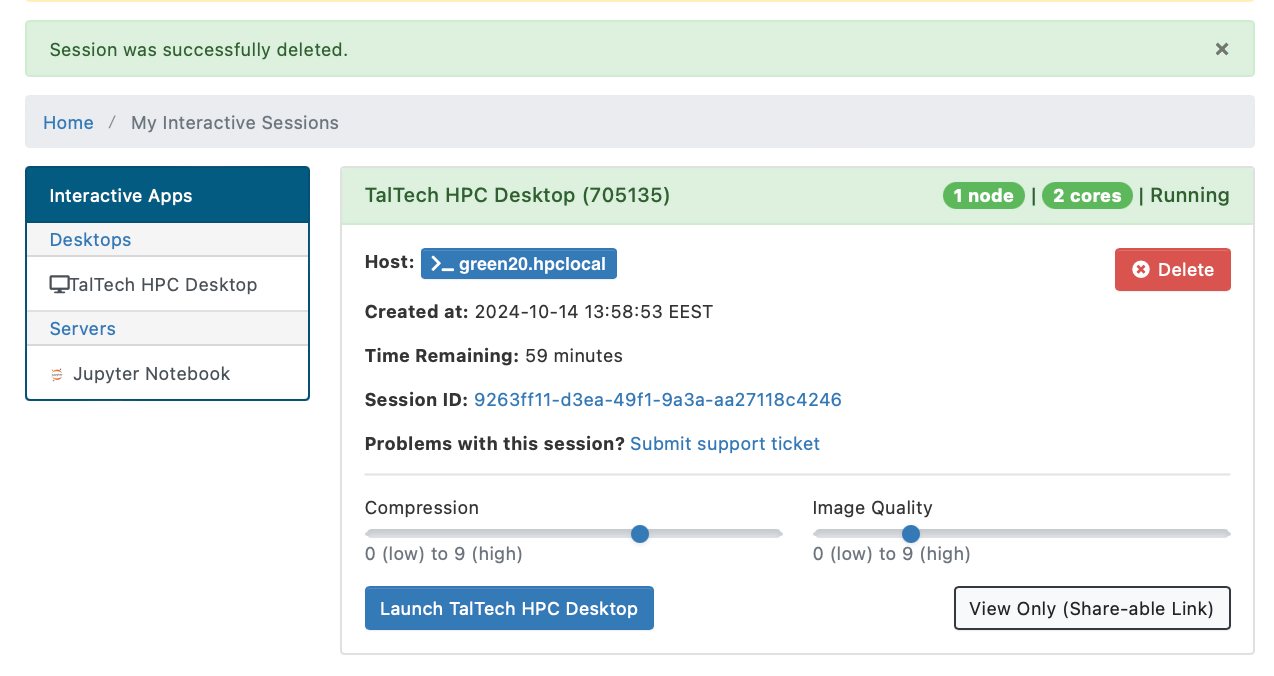

When needed resources will become available, your session will start and this picture will appear.

We recommend to use default settings for "Compression" and "Image Quality", unless you require high-quality screenshots.

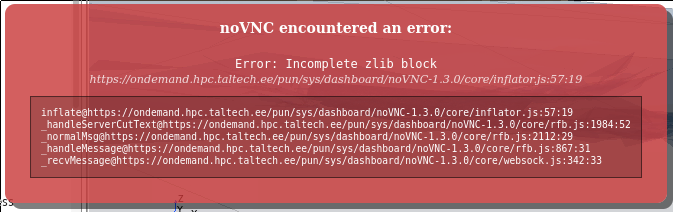

NB! Do not use quality settings "Compression 0" and/or "Image Quality 9", this will cause a zlib error message. The message box can be removed by reloading the browser tab.

-

To start interactive desktop press "Launch TalTech HPC Desktop"

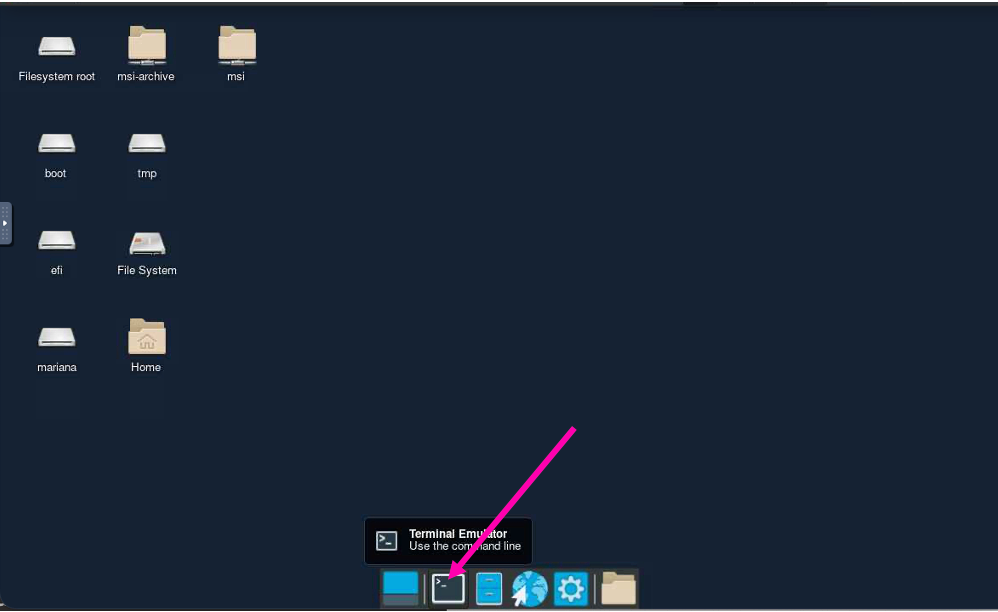

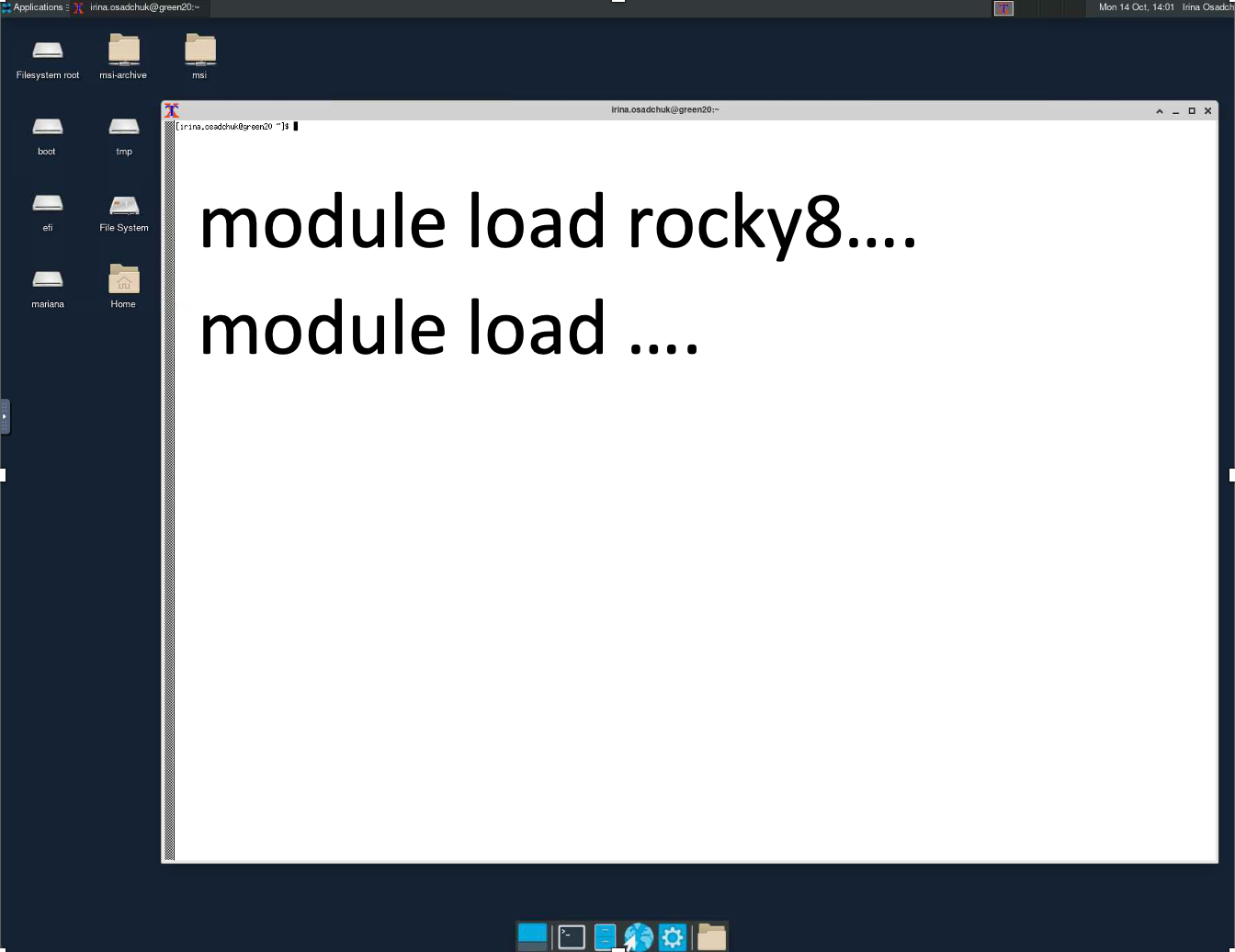

Will appear your HPC Desktop, where user can open XTerminal.

-

Load environment and program needed and start vizualization. More detailed instructions on environment and program loading are given below.

More information about using onDemand can be found at visualization page.

-

In the end of session do not forget to disconnect.

OnDemand Jupyter#

There are two ways to run Jupyter on OnDemand:

- Using Singularity containers

  - Provides a stable, pre-configured environment for Jupyter.

  - You can use HPC-provided containers, but cannot install additional packages yourself. If you need extra packages, contact the HPC staff.

  - Alternatively, you can build and use your own Singularity containers for more customization. See our Singularity documentation for details.

- Using the micromamba package manager

  - Offers flexibility to create and manage your own Python environments.

  - You can install and update packages as needed, including JupyterLab and other Python libraries.

  - This method may require more setup and maintenance, and environments are user-managed.

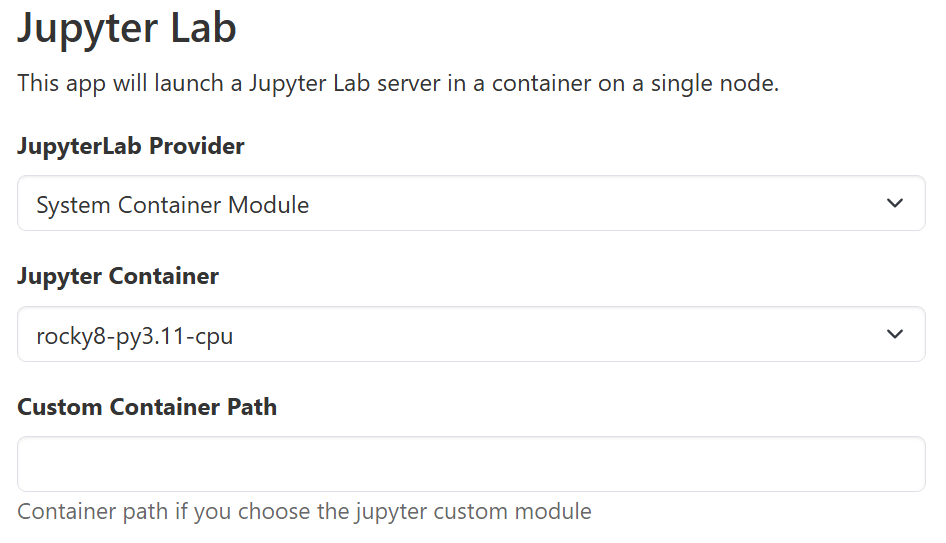

OnDemand Jupyter using containers#

- Using the HPC-provided pre-built Singularity containers.

  - With these, you cannot install additional packages yourself, but you are encouraged to ask the staff to add the packages you need.

- Using your own Singularity containers.

  - Follow our documentation on obtaining and building Singularity containers to find out how.

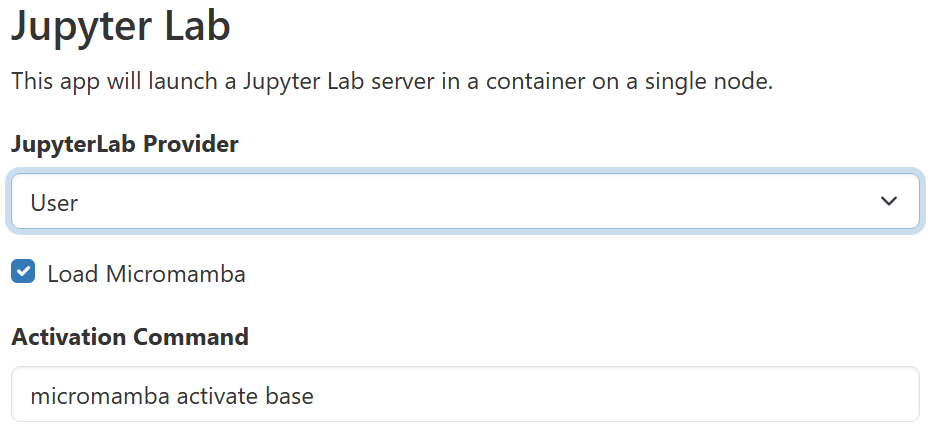

OnDemand Jupyter using the micromamba package manager#

- Before using this in the web interface, you must configure the environment by logging in to base on the command line (you may do this by using the OnDemand Desktop app or by logging in via SSH).

- From there, load the micromamba module:

module load rocky8 micromamba

- Then install JupyterLab:

micromamba install jupyterlab

- Navigate back to the OnDemand Jupyter app in your browser. Choose "User" as the "JupyterLab Provider" option, and set "micromamba activate base" as the "Activation command".
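The one-time command-line setup described above can be sketched as a single script. This is only an illustration: the two commands come from the steps above, while the availability checks, the `setup_status` variable, and the final version check with `micromamba run -n base` are additions, on the assumption that the install lands in the environment named `base`.

```shell
#!/bin/bash
# Sketch: one-time setup, run over SSH or in an OnDemand Desktop XTerminal.

setup_status="skipped"

# Load the micromamba module if the module system is present in this shell.
if type module >/dev/null 2>&1; then
    module load rocky8 micromamba
fi

if type micromamba >/dev/null 2>&1; then
    # Install JupyterLab (command as given in the steps above).
    micromamba install jupyterlab
    # Assumption: the install went into "base"; print the installed version.
    micromamba run -n base jupyter lab --version && setup_status="done"
else
    echo "micromamba is not available in this shell; nothing was installed."
fi

echo "Setup status: ${setup_status}"
```

If the last line reports `done`, the OnDemand Jupyter app should be able to start JupyterLab with the activation command given above.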

Note on installing pip packages using Jupyter with micromamba:

When working inside a Jupyter notebook, use %pip (not !pip) to install Python packages. The %pip magic function ensures packages are installed in the correct environment for your notebook.